“There is increasing concern that in modern research false findings may be the majority or even the vast majority of published research claims.”

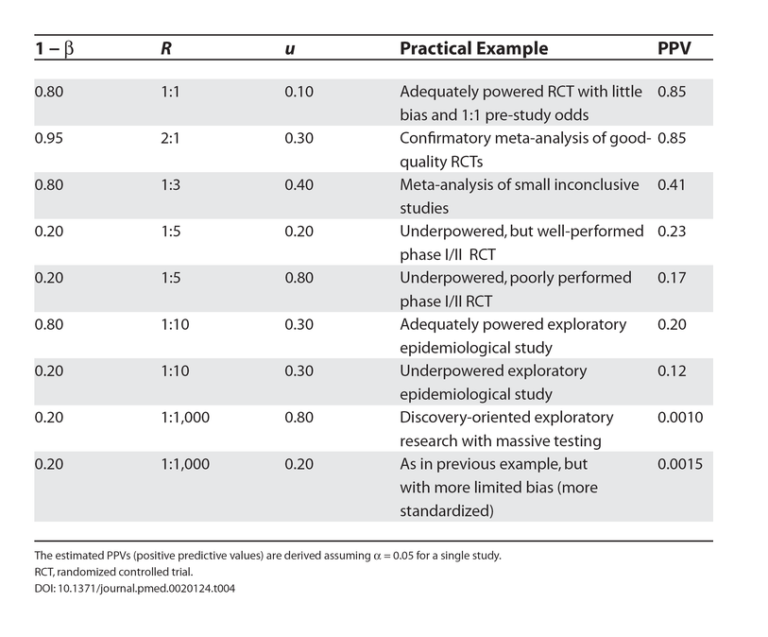

In this 2005 essay, Dr. John P.A. Ioannidis argues that the majority of modern “science” is unscientific. He notes that once a range of compounding factors is accounted for, the majority of published “statistically significant” findings are likely to be untrue or unverifiable. Factors that degrade the reliability of published research include small studies, small effect sizes, flexible study designs and financial incentives (both of which introduce bias), and a larger number of researchers working within the same field.
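To see why these factors matter, it helps to run the arithmetic. The sketch below computes the positive predictive value (PPV) of a "statistically significant" finding: the probability that a claimed relationship is actually true. It is an illustration, not a reproduction of the essay's analysis. The function name `ppv`, its parameter names, and the two example scenarios are assumptions chosen for this post. `prior_odds` is the ratio of true to false relationships being tested in a field, `power` falls as studies and effect sizes get smaller, and `bias` is the assumed fraction of would-be null results that end up reported as positive anyway.

```python
def ppv(prior_odds, alpha=0.05, power=0.8, bias=0.0):
    """Probability that a 'significant' finding reflects a true relationship.

    prior_odds: ratio of true to false relationships tested (hypothetical input)
    alpha:      false-positive rate of the significance test
    power:      probability of detecting a true relationship (1 - beta)
    bias:       assumed fraction of non-significant analyses reported as positive
    """
    # True relationships reported as positive: detected honestly, or missed
    # but pushed over the line by bias.
    true_positives = prior_odds * (power + bias * (1 - power))
    # False relationships reported as positive: chance hits, plus bias.
    false_positives = alpha + bias * (1 - alpha)
    return true_positives / (true_positives + false_positives)


# Illustrative scenarios only; the numbers are assumptions, not data.
scenarios = {
    "well powered, even prior odds, no bias": dict(prior_odds=1.0, power=0.8, bias=0.0),
    "small study, long-shot hypothesis, some bias": dict(prior_odds=0.1, power=0.2, bias=0.2),
}

for label, params in scenarios.items():
    print(f"{label}: PPV = {ppv(**params):.2f}")
```

Under these assumed inputs the first scenario gives a PPV well above one half, while the second drops far below it, which is the pattern the essay describes: low power, long-shot hypotheses, and bias each push the share of true findings down.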